Anthony Cecchini is the President and CTO of Information Technology Partners (ITP), an ERP technology consulting company headquartered now in Virginia, with offices in Herndon. ITP offers comprehensive planning, resource allocation, implementation, upgrade, and training assistance to companies. Anthony has over 20 years of experience in SAP business process analysis and SAP systems integration. ITP is a Silver Partner with SAP, as well as an Appian, Pegasystems, and UIPath Low-code and RPA Value Added Service Partner. You can reach him at [email protected].

Technological advancement lets organizations generate data continuously. More data can mean better decision-making insights, but it also adds complexity, because you need sophisticated systems to handle enormous data sets. In addition, an enterprise landscape with multiple data sources requires you to monitor every data platform frequently for the latest trends and updates.

A data fabric offers an effective solution to this complexity. By creating a unified data environment, it connects your processes and the organization's departments through a single, seamless data layer.

Below, we explore the key functionalities of a data fabric as part of your Data Architecture solution and how it works. For a quick recap of what a Data Architecture is, see our blog Data Architecture – Understanding The Benefits.

A data fabric is a unified architecture that uses artificial intelligence to integrate, enrich, and deliver real-time data for various uses. Because it combines core techniques such as data governance, pipelining, integration, cataloging, and orchestration, the platform delivers data in time to support both analytical and operational tasks. Industry experts describe data fabric as a fast-rising trend, and it is especially valuable if your organization handles enormous, complex data sets.

Data fabric architecture consists of many components that work together to deliver data where it is needed. Each component plays a vital role in extracting data and delivering insights across the enterprise. Here are the seven fundamental components of a data fabric architecture:

Data Sources (Internal/External)

Data sources generate the information that the fabric processes, stores, and makes available for use. A data source may be internal or external. For instance, internal data may originate from human resource information systems, enterprise resource planning (ERP) software, document submission systems, and customer relationship management (CRM) tools within your organization. External data may come from social media and third-party repositories.

Data Analytics and Knowledge Graphs

Many sources generate unstructured or semi-structured data, so you need a system that converts it into a simple, structured format. Data analytics tools and knowledge graphs convert data sets into coherent formats for easy processing. Through data analytics, you can also view and understand the complex relationships within the data to generate insights.
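To make the idea concrete, here is a minimal Python sketch, assuming records arrive as semi-structured dictionaries (for example, parsed JSON). It flattens each record into (subject, predicate, object) triples, the basic unit of a knowledge graph, and then follows one relationship between records from two different systems. The record fields and IDs are hypothetical, and a real fabric would typically use a dedicated graph store rather than an in-memory dictionary.

```python
from collections import defaultdict

# Hypothetical semi-structured records, e.g. parsed from CRM and HR exports.
records = [
    {"id": "cust-1", "type": "Customer", "name": "Acme Corp", "account_manager": "emp-7"},
    {"id": "emp-7", "type": "Employee", "name": "J. Smith", "department": "Sales"},
]


def to_triples(record):
    """Flatten one record into (subject, predicate, object) triples."""
    subject = record["id"]
    return [(subject, key, value) for key, value in record.items() if key != "id"]


def build_graph(records):
    """Index triples by subject so relationships can be followed."""
    graph = defaultdict(list)
    for record in records:
        for subject, predicate, obj in to_triples(record):
            graph[subject].append((predicate, obj))
    return graph


graph = build_graph(records)
# Follow the account_manager edge from the customer to the employee record.
manager_id = dict(graph["cust-1"])["account_manager"]
print(dict(graph[manager_id])["department"])  # Sales
```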

Artificial Intelligence Algorithms

Artificial intelligence (AI) and machine learning (ML) algorithms let you monitor all organizational data continuously. In turn, they reduce processing time and deliver insights faster. However, before applying advanced algorithms, make sure the data is aligned with the intended applications and that security compliance requirements are met.
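As a minimal illustration of automated monitoring, the Python sketch below flags a daily ingestion count that deviates sharply from its recent history. It deliberately swaps a simple statistical z-score check in for a trained ML model, and the function name, threshold, and sample counts are all hypothetical.

```python
import statistics


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it lies more than `threshold` standard deviations
    from the mean of `history` (needs at least two historical values).

    A simple statistical stand-in for the ML models a real fabric might use.
    """
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # Perfectly flat history: any change at all is unusual.
        return latest != mean
    return abs(latest - mean) / stdev > threshold


# Hypothetical daily row counts from one source system.
history = [1000, 1010, 990, 1005, 995]
print(is_anomalous(history, 20))  # True
```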

User Integration Capabilities

A robust data fabric has built-in integration capabilities for connecting with the interfaces that deliver data to users. Its architectural backbone contains application programming interfaces (APIs) and software development kits (SDKs) that fill this role alongside built-in connectors. Your IT team can configure these capabilities manually, as a do-it-yourself (DIY) procedure, or through an automated self-service tool.
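One way to picture built-in connectors is as a common interface that every source implements, so the fabric can query any source the same way. The Python sketch below assumes that design; the `Connector`, `CsvConnector`, `InMemoryConnector`, and `Fabric` names are hypothetical and do not correspond to any particular vendor's SDK. It assumes Python 3.9+ for the `list[dict]` annotations.

```python
import csv
import io
from abc import ABC, abstractmethod


class Connector(ABC):
    """Hypothetical interface that every built-in connector implements."""

    @abstractmethod
    def fetch(self) -> list[dict]:
        """Return the source's records as a list of dictionaries."""


class CsvConnector(Connector):
    """Reads records from CSV text, e.g. an export from an HR system."""

    def __init__(self, text: str):
        self.text = text

    def fetch(self) -> list[dict]:
        return list(csv.DictReader(io.StringIO(self.text)))


class InMemoryConnector(Connector):
    """Wraps records already held in memory, e.g. from an API response."""

    def __init__(self, rows: list[dict]):
        self.rows = rows

    def fetch(self) -> list[dict]:
        return list(self.rows)


class Fabric:
    """Registers connectors by name and queries them through one interface."""

    def __init__(self):
        self._connectors: dict[str, Connector] = {}

    def register(self, name: str, connector: Connector) -> None:
        self._connectors[name] = connector

    def query(self, name: str) -> list[dict]:
        return self._connectors[name].fetch()


fabric = Fabric()
fabric.register("hr", CsvConnector("id,name\n1,Ana\n"))
fabric.register("crm", InMemoryConnector([{"id": "c-9", "name": "Acme Corp"}]))
print(fabric.query("hr"))  # [{'id': '1', 'name': 'Ana'}]
```

Because callers only ever use `Fabric.query`, adding a new source means writing one more connector class rather than changing every consumer.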

Consumption Layers

The consumption layer lets users explore various data sets from a given source. It gives the data team a simple front-end interface for interacting with the data. Common consumption tools include virtual assistants, chatbots, embedded analytics tools, and real-time dashboards for monitoring processing activities.

Transportation Layers

A data fabric must have an efficient means of moving data. The transport layer moves data between points on the platform. It should use end-to-end encryption to prevent unauthorized access to data in transit, and it should move data efficiently by compressing it and avoiding duplicate transfers between points.
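The Python sketch below illustrates two of the transport-layer duties described above, compression and duplicate avoidance, using the standard `zlib` and `hashlib` modules. It assumes payloads are raw bytes and identifies duplicates by their SHA-256 digest. Encryption is intentionally left out; in practice it would usually be handled by TLS or a dedicated cryptography library. The `Transport` class is hypothetical.

```python
import hashlib
import zlib


class Transport:
    """Hypothetical transport that compresses payloads and skips duplicates.

    Encryption is out of scope here; a real transport layer would also
    encrypt data (for example, over TLS) before it leaves the source.
    """

    def __init__(self):
        self._sent_digests = set()
        self.delivered = []  # compressed payloads that reached the target

    def send(self, payload: bytes) -> bool:
        digest = hashlib.sha256(payload).hexdigest()
        if digest in self._sent_digests:
            return False  # identical payload already moved; skip the transfer
        self._sent_digests.add(digest)
        self.delivered.append(zlib.compress(payload))
        return True

    def receive(self) -> list[bytes]:
        """Decompress everything delivered so far."""
        return [zlib.decompress(blob) for blob in self.delivered]


transport = Transport()
batch = b"order,1001,pump-x\n" * 100
print(transport.send(batch), transport.send(batch))  # True False
```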

Hosting Environment

The data fabric framework isn't complete without a reliable hosting environment. Depending on your configuration preferences, the host can be on-premises, cloud-based, multi-cloud, or hybrid. An external hosting platform is often the best choice because the vendor gives you access to advanced cloud data management tools.

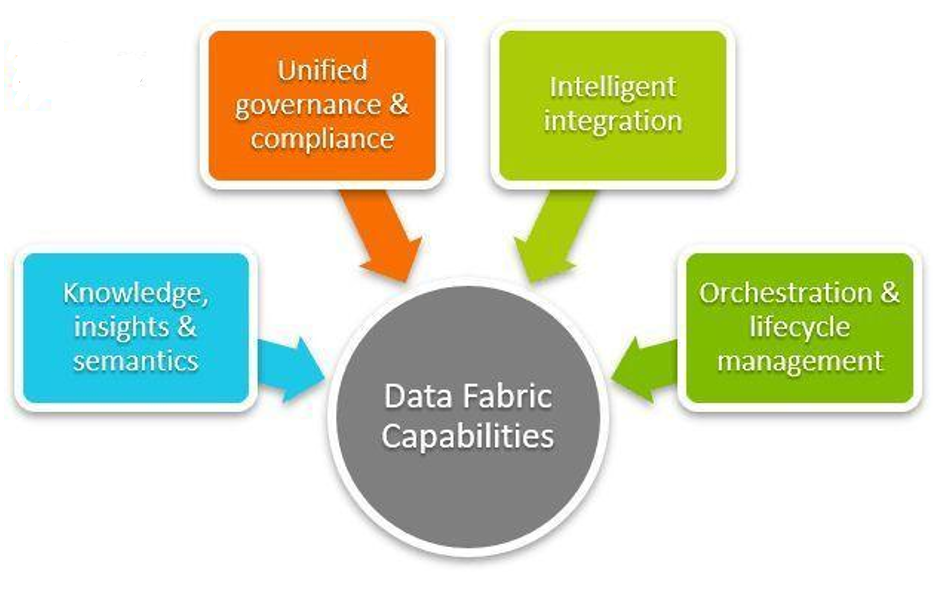

Data fabrics offer powerful capabilities for solving business needs. Although your data needs will shape the architectural design, a good platform should deliver both functional and non-functional capabilities, including:

Functional Capabilities

Data Engineering: for building reliable data pipelines.

Data Catalogs: for classifying and representing data assets.

Data Governance: for quality assurance and security compliance.

Data Integration: for data retrieval and delivery to the target.

Data Orchestration: for defining the sequence in which data flows from the source to the target.
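Data orchestration can be modeled as a dependency graph in which each step runs only after the steps it depends on. The Python sketch below assumes Python 3.9+ and uses the standard library's `graphlib.TopologicalSorter` to derive a valid execution order; the step names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step maps to the set of steps it depends on.
pipeline = {
    "cleanse": {"extract_crm", "extract_erp"},  # engineering
    "catalog": {"cleanse"},                     # cataloging
    "govern": {"cleanse"},                      # governance checks
    "deliver": {"catalog", "govern"},           # integration to the target
}

# static_order() yields every step after all of its dependencies.
order = list(TopologicalSorter(pipeline).static_order())
print(order)
```

The relative order of independent steps (such as `catalog` and `govern`) is not guaranteed, but every step always follows its dependencies, and a cycle in the definitions raises `graphlib.CycleError`.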

Non-Functional Capabilities

Data Distribution: A robust data fabric should be deployable across varied operating environments. It should also support data virtualization, so applications can access data where it resides instead of working on copies, which helps preserve data integrity.

Data Safety: A data fabric constantly handles data, so it needs end-to-end encryption to protect privacy. It should also enforce credential-based access so that only the data team and other authorized users can reach the data.

Data Scaling: Data volumes grow and shrink with business needs. A good fabric should therefore scale up or down accordingly while still supporting data manipulation for analytical purposes.

Data Accessibility: The platform should enable fast and easy data access regardless of type, format, or storage platform.
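The Data Safety requirement above usually reduces to a deny-by-default permission check. The minimal Python sketch below assumes a hypothetical mapping from roles to datasets; a real fabric would load these permissions from its governance layer and combine them with authenticated credentials.

```python
# Hypothetical role-to-dataset permissions; a real fabric would load these
# from its governance layer rather than hard-coding them.
PERMISSIONS = {
    "data_engineer": {"sales", "hr", "finance"},
    "analyst": {"sales"},
}


def can_read(role: str, dataset: str) -> bool:
    """Deny by default: unknown roles or unlisted datasets get no access."""
    return dataset in PERMISSIONS.get(role, set())


print(can_read("analyst", "hr"))  # False
```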

Implementing a data fabric brings several benefits. You can access and share vital data within a distributed environment and solve complex data challenges. A data fabric also offers the following benefits to your organization:

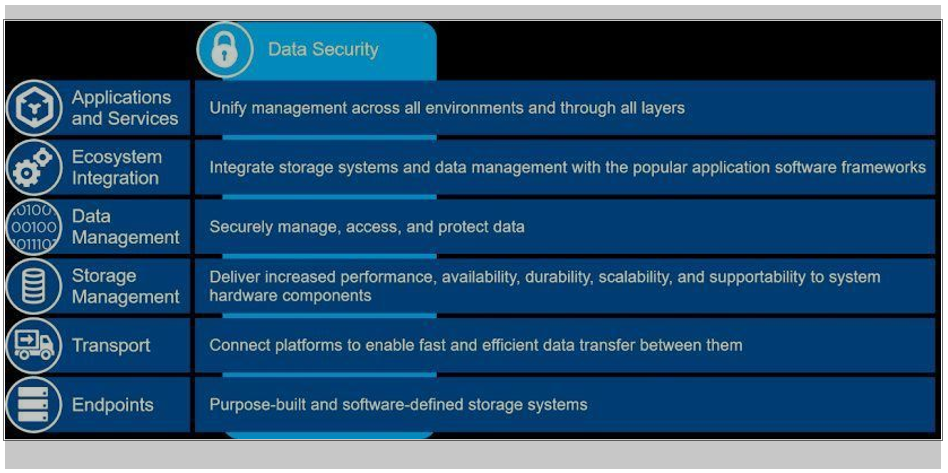

High Data Security

Data privacy is a major security concern for most organizations. A data fabric supports security and regulatory compliance by applying consistent governance policies across its whole ecosystem, and its multi-key encryption system greatly reduces the risk of unauthorized access.

Smart Data Integration

Advanced data handling techniques enable intelligent integration. You can gather information from various sources, classify data sets, and connect them through the fabric's built-in integration capabilities. As a result, you eliminate data silos and streamline data handling.

Improved Processing Efficiency

You can improve efficiency in data processing using a data fabric. For instance, you can shorten the time for gathering insights and making decisions. You can also enable a 360-degree view of the enterprise for selected operators in the data team.

Unified Database System

A data fabric presents your databases as a single, unified data layer. As a result, you can standardize data access so that applications can reach the data from any location. Because the underlying databases remain distributed, different applications can share a common source without interfering with each other.

Enhanced Data Management

Data fabric enhances your organization’s data management capabilities. You can easily retrieve and enrich all vital information for various uses. In addition, you can transform data sets and share them with internal and external stakeholders.

Deeper Insights

Data fabrics can reveal how customers use your services. You can cross-reference critical data points and analyze relationship networks through a single view. That way, you can combine customer data to create strategies that improve their experience.

Data fabric design is a relatively new concept, but its integration capabilities make it well suited to many purposes. Here are common applications and use cases of data fabric:

Preventive Maintenance

Data fabric technology is a valuable tool for preventive maintenance analysis. It combines insights from different data points to help predict maintenance cycles. It can also help you plan equipment, personnel, spare parts, and materials so you reduce downtime.
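A simple way to turn failure history into a maintenance schedule is to use the mean time between failures (MTBF). The Python sketch below assumes you have at least two past failure dates for a piece of equipment and schedules service at a hypothetical safety factor of 80% of the MTBF after the most recent failure. Real predictive models would draw on many more data points, such as sensor readings.

```python
from datetime import date, timedelta


def next_maintenance(failures, safety_factor=0.8):
    """Schedule service before the next expected failure.

    Computes the mean time between past failures (MTBF) in days and
    schedules maintenance at `safety_factor` of that interval after the
    most recent failure. Requires at least two failure dates.
    """
    failures = sorted(failures)
    gaps = [(later - earlier).days for earlier, later in zip(failures, failures[1:])]
    mtbf_days = sum(gaps) / len(gaps)
    return failures[-1] + timedelta(days=round(mtbf_days * safety_factor))


# Hypothetical failure history for one pump: failures every 60 days.
history = [date(2023, 1, 1), date(2023, 3, 2), date(2023, 5, 1)]
print(next_maintenance(history))  # 2023-06-18
```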

Elimination of Data Silos

Data silos hurt productivity but are hard to remove because they rely on application-dependent databases. Data fabric technology helps because it can decouple data from the application that owns it. Your data managers can therefore eliminate silos even where the architecture still relies on point-to-point integrations.

Data Accessibility in Institutions

Educational and healthcare institutions rely on data networks to store and share information for their research and innovation. However, silos tend to build up in these set-ups and complicate sharing. Data fabrics provide a reliable environment for information sharing because they don't require extensive redesign of the existing IT infrastructure.

DoD: Speed Up Attacks or Shorten the Kill Chain

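The need to identify targets, communicate the details, and "attack" or destroy could be described as a somewhat "timeless" reality of war, yet current technology is now bringing this process to new, breakthrough levels of speed and efficiency. By integrating data from many sources and delivering it in real time, a data fabric can help shorten the time between identifying a target and acting on it.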

A robust data fabric should follow well-designed protocols to achieve the best results, so you can optimize and effectively manage your data resources. Below are five tips for implementing a successful data fabric architecture.

Prioritize Data Integration Layers

A common shortfall when designing a data fabric is ending up with a central repository that lacks functional capabilities. That is essentially a data lake: it may hold much of the data the fabric needs, but without integration layers it cannot deliver that data where it is needed. You also need APIs that deliver data insights to front-end users.

Comply with Regulatory Requirements

Robust data fabric architectures reduce the risk of exposing data to unauthorized users, but that protection begins with regulatory compliance. Different data types fall under different regulatory jurisdictions and governing laws. You should therefore automate policy notifications so you don't breach the relevant laws.
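Automated policy notifications can start with simple rules that match sensitive field names. The Python sketch below assumes hypothetical column-name patterns and messages; it only flags fields for human review and does not decide which regulations actually apply.

```python
import re

# Hypothetical rules: a column-name pattern and the review it should trigger.
POLICY_RULES = [
    (re.compile(r"ssn|social_security", re.IGNORECASE), "personal identifier, review applicable privacy laws"),
    (re.compile(r"diagnosis|patient", re.IGNORECASE), "health data, review HIPAA obligations"),
    (re.compile(r"card_number|cvv", re.IGNORECASE), "payment data, review PCI DSS obligations"),
]


def policy_notifications(columns):
    """Return one notification per (column, rule) match, in column order."""
    notes = []
    for column in columns:
        for pattern, message in POLICY_RULES:
            if pattern.search(column):
                notes.append(f"{column}: {message}")
    return notes


for note in policy_notifications(["customer_ssn", "order_total", "patient_id"]):
    print(note)
```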

Leverage Open Source Technology

A good data fabric allows easy extension and integration of associated tools, which is why an open-source solution can be a game changer during its design. Implementing a data fabric architecture also requires significant financial investment. An open-source system reduces reliance on a single vendor and helps you protect that investment even if you switch to a different vendor.

Activate Native Code Generation

You can configure the data fabric to generate native code automatically in different programming languages. You can then use that code to integrate new systems into the fabric. Native code generation also lets you add data systems quickly without inflating your investment. However, it requires pre-built connectors for easy implementation.
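As an illustration of generated code, the Python sketch below turns a schema description into a SQL `CREATE TABLE` statement. The type mapping, function name, and schema format are hypothetical; real fabrics generate code from their own metadata models and for their own target languages.

```python
# Hypothetical mapping from fabric-level types to SQL column types.
SQL_TYPES = {
    "string": "VARCHAR(255)",
    "integer": "INTEGER",
    "decimal": "DECIMAL(18, 2)",
    "date": "DATE",
}


def generate_ddl(table, schema):
    """Generate a CREATE TABLE statement from a {column: type} schema."""
    columns = ",\n".join(f"    {name} {SQL_TYPES[kind]}" for name, kind in schema.items())
    return f"CREATE TABLE {table} (\n{columns}\n);"


print(generate_ddl("orders", {"order_id": "integer", "amount": "decimal", "placed_on": "date"}))
```

An unknown type raises a `KeyError`, which is a deliberate choice in this sketch: failing loudly is safer than generating a column with a guessed type.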

Embrace Graph-based Analytics

Data analytics tools use knowledge graphs to visualize metadata. For relationship-heavy questions, graph analytics often works better than relational databases because graphs enrich the data with descriptive labels and semantic context. You should therefore use graph-based analytics to correlate data and unearth relevant insights.
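The Python sketch below shows why graphs suit correlation questions: finding how a customer relates to a support ticket is a breadth-first search over relationships, whereas a relational query would typically need a join for each hop. The entities and edges are hypothetical, and the graph is held in memory purely for illustration.

```python
from collections import deque

# Hypothetical relationships between entities drawn from different sources.
edges = [
    ("customer:acme", "order:1001"),
    ("order:1001", "product:pump-x"),
    ("product:pump-x", "ticket:77"),
    ("customer:globex", "order:1002"),
]


def build_adjacency(edges):
    """Build an undirected adjacency map from (a, b) relationship pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def shortest_path(graph, start, goal):
    """Breadth-first search: return the shortest chain of relationships, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for neighbour in graph.get(node, ()):
            if neighbour not in seen:
                seen.add(neighbour)
                queue.append(path + [neighbour])
    return None


graph = build_adjacency(edges)
print(shortest_path(graph, "customer:acme", "ticket:77"))
# ['customer:acme', 'order:1001', 'product:pump-x', 'ticket:77']
```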

Summary

Data fabric is a relatively new approach to data handling. However, its fast growth and adoption by data managers are key indicators of its effectiveness. A data fabric reduces the risk of data breaches, improves efficiency, enables smart data integration, and delivers better insights. In the ever-evolving field of data science, few approaches are better suited to managing enormous data sets than a data fabric.