TAKE NOTE (Insights and Emerging Technology)


Interested in learning more about RPA? Download our FREE White Paper on “Embracing the Future of Work”

UNDER DEVELOPMENT (Insights for Developers)

S/4 BTP Cloud-based Enhancements using Side-by-Side Extensibility Part 3

Intro

In this month’s blog, we are continuing the development of our Sale to Tweet BTP extension. Last month, we completed our first step towards this task by configuring the Event Mesh to receive sales creation events and storing them within a queue to be consumed.

This month we will use the BTP ABAP Environment to detect when a Sales event occurs in our SAP system, and subsequently execute custom logic in response to these events. The ABAP environment, if you’ll recall, also served to solve two of our main problems when considering how to move our extensions to the cloud:

Problem 2: Even if we receive synchronous notifications, the point of side-by-side extensibility is to enhance the OTB SAP solution. How can we do that if the data resides in SAP?

Problem 3: Custom solutions require custom logic. If my ABAP system is on the ERP system itself, how can I execute any logic without an SAP environment?

Our ABAP environment, with the use of OData services, will address both of these problems as you’ll see shortly.

Our custom ABAP extension will be split up into three main tasks, two of which we will code in this month’s blog:

- Receive Sales events from the Event Mesh, store event data in a custom database table, and trigger follow-on actions.

- Once triggered by the event in step 1, reach out to our SAP On-Premise system to retrieve further details about the sales event, using OData services.

- (Saved for next blog) Craft and transmit our “Tweet” to our X/Twitter iFlow, which will create a tweet with Sales information on our Twitter account.
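As a language-neutral illustration of the first two steps, here is a minimal Python sketch (not the ABAP code we will build). The event layout, the `SalesOrder` field name, the `event_table` dictionary standing in for the custom database table, and the `handle_sales_event` helper are all assumptions made for illustration only.

```python
import json
from typing import Callable

# Hypothetical stand-in for the custom database table that stores received events.
event_table: dict[str, dict] = {}


def handle_sales_event(raw_event: str, follow_on: Callable[[str], None]) -> str:
    """Store one incoming event, then trigger the follow-on action for its sales order."""
    event = json.loads(raw_event)
    order_id = event["data"]["SalesOrder"]  # assumed payload field name
    event_table[event["id"]] = {"type": event["type"], "sales_order": order_id}
    follow_on(order_id)  # in step 2 this would fetch order details via OData
    return order_id


# Usage: the follow-on action here simply records which orders need details.
requested: list[str] = []
sample = json.dumps({
    "id": "evt-001",                       # hypothetical event id
    "type": "SalesOrder.Created",          # hypothetical event type
    "data": {"SalesOrder": "0000012345"},  # hypothetical order number
})
handle_sales_event(sample, requested.append)
```

Passing the follow-on action in as a callable keeps the event handling separate from the remote call, which mirrors how the first task only triggers the second rather than performing it.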

You may notice that all three of the listed steps require a connection to remote systems and/or applications. This highlights the most important difference between coding ABAP in the cloud and coding a solution on-premise: the components of our solution do not reside on the same machine or cluster of networked machines. This means that communication via any “local” means, such as memory storage, local function calls, or similar methods, is not possible.

Instead, the separate components of our solution communicate using RESTful HTTP APIs, allowing the components to live and interact from decentralized locations completely remote from our core logic, and yet still operate as one distributed solution. Remote communication, however, adds a layer of complexity to our solution, since we now must maintain credentials, authentication protocols, etc.
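To make that extra complexity concrete, the sketch below builds (but does not send) an authenticated HTTP GET request in Python. It is only an illustration of what every remote call has to carry. The host, the entity path, the technical user, and the choice of Basic authentication are all hypothetical and are not taken from our actual landscape.

```python
import base64
import urllib.request


def build_odata_request(base_url: str, entity_path: str,
                        user: str, password: str) -> urllib.request.Request:
    """Build (without sending) a GET request that carries Basic authentication."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{entity_path.lstrip('/')}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
        method="GET",
    )


# Usage with made-up values; nothing is transmitted over the network here.
request = build_odata_request(
    "https://my-sap-host.example.com/odata",  # hypothetical service root
    "SalesOrders('0000012345')",              # hypothetical entity path
    "TECH_USER",                              # hypothetical technical user
    "secret",                                 # hypothetical password
)
```

Notice that the endpoint and the credentials have to come from somewhere. Hard-coding them next to the business logic, as this sketch does, is exactly what we want to avoid in a real solution.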

Fortunately, SAP provides an elegant solution to this problem for our cloud components in the form of Communication Scenarios/Communication Arrangements. We will explain how and where to configure these artifacts later. For reasons that will be clear shortly, we will first log into our BTP ABAP environment and configure the basic details of our solution.


Developing our Custom Solution

Getting our BTP ABAP environment up and running requires a few steps, but is mostly very similar to configuring any other service in the BTP. I have included an additional FAQ section at the end of the blog for complete novices, but we will start this section of the blog as if we have a BTP ABAP environment up and running and have connected to our system via the Eclipse IDE.

After logging into Eclipse and connecting to our ABAP BTP repository, determining our next step might feel a bit daunting. We understand that we have an Event Mesh instance out there somewhere, but the question now becomes: how do we connect to this instance, listen for only our particular event, and then react to this event by executing some custom code?

SAP provides a GUI-heavy guided approach that helps us do just that in our ABAP BTP instance, and it does this by leveraging two generated artifacts:

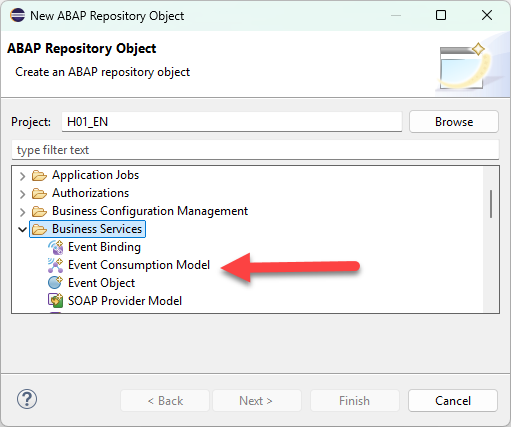

- Event Consumption Model – The event consumption model leverages an AsyncAPI file to generate source code, data definitions, behavior definitions, and ultimately an inbound service that allows our ABAP system to listen for and process business events.

- Communication Scenario – This artifact links the inbound and/or outbound services that will be utilized in a particular “scenario”. This abstract concept allows us to loosely couple our event processing to the backend cloud system we are expecting this event from. We’ll create and loosely cover this below within our Eclipse/ADT portion of the guide, but this concept will be much clearer after we reach the ABAP administrative portion, where we will utilize this scenario to connect to our system.
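To give a feel for what an AsyncAPI file describes, the following Python sketch reads a tiny, hand-written AsyncAPI 2.x-style document and lists each channel with the fields of its message payload. The document content, the channel name, and the `describe_events` helper are made up for illustration. This is not how the ADT wizard is implemented, only a rough picture of the kind of information a generator can read from such a file.

```python
# Minimal, hypothetical AsyncAPI 2.x-style description of a single event.
asyncapi_doc = {
    "asyncapi": "2.0.0",
    "channels": {
        "sales/order/created": {
            "subscribe": {
                "message": {"$ref": "#/components/messages/SalesOrderCreated"}
            }
        }
    },
    "components": {
        "messages": {
            "SalesOrderCreated": {
                "payload": {
                    "type": "object",
                    "properties": {"SalesOrder": {"type": "string"}},
                }
            }
        }
    },
}


def describe_events(doc: dict) -> dict[str, list[str]]:
    """Map each channel to the sorted payload field names of its message."""
    result: dict[str, list[str]] = {}
    for channel, operations in doc["channels"].items():
        ref = operations["subscribe"]["message"]["$ref"]
        message_name = ref.rsplit("/", 1)[-1]  # last segment of the local reference
        payload = doc["components"]["messages"][message_name]["payload"]
        result[channel] = sorted(payload["properties"])
    return result


events = describe_events(asyncapi_doc)
```

The channel tells a consumer what to listen for and the payload schema tells it what data to expect, which is why a single file can drive the generation of both data definitions and an inbound service.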

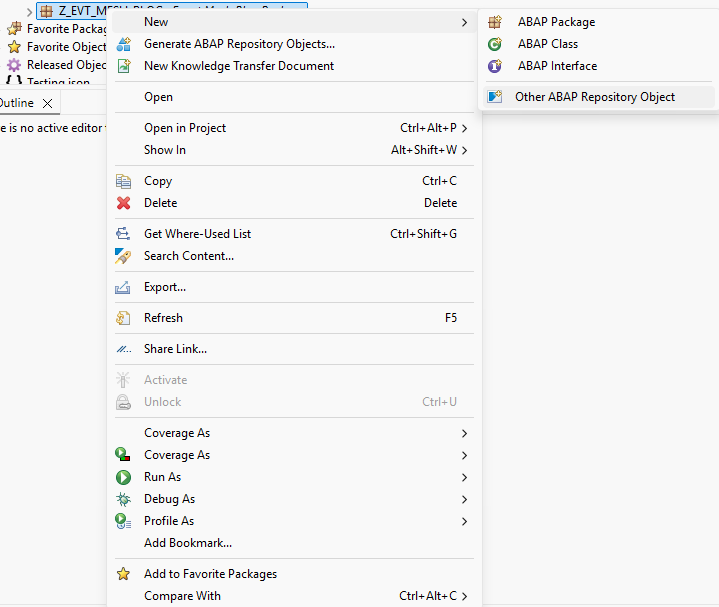

Let’s start by creating our event consumption model. To do this, follow the menu path shown in the screenshot below:

When you get to the model creation screen, populate the details as required. Two things that we want to pay attention to here are…

– Dig Deeper –

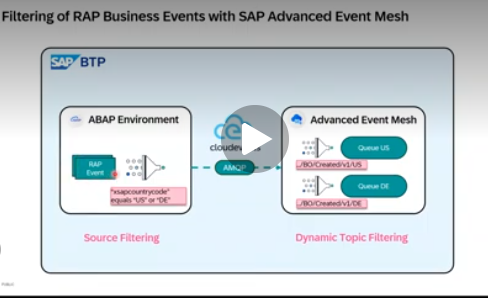

Filtering of RAP business events in SAP BTP ABAP Environment

Q&A (Post your questions and get the answers you need)

Q. How Do SAP’s ABAP Environment and Event Mesh Work?

A. SAP’s BTP ABAP Environment (“Steampunk”) is quickly becoming the default place to build SAP extensions because it protects the S/4HANA “clean core” while keeping ABAP talent relevant.

Instead of burying custom code inside the ERP, teams now push logic into a cloud ABAP space with modern tooling, the RAP model, and stable APIs. This matters because SAP upgrades, cloud migrations, and AI-enabled automation all depend on having a core that’s modular and clean—not weighed down by years of Z-objects and user exits. ABAP Environment gives enterprises a cloud-native landing zone for that modernization.

Event Mesh adds the missing superpower: real-time, loosely coupled communication. SAP systems (ECC, S/4HANA, SuccessFactors, Ariba, Concur) emit standard “business events” such as Purchase Order Created, Business Partner Changed, or Material Availability Updated. Instead of point-to-point API calls, these events flow into a central mesh and can be consumed by any subscriber—including your ABAP Environment apps. This turns your landscape into an event-driven architecture where extensions respond instantly to changes in the business without creating brittle dependencies.

When you pair BTP ABAP Environment with Event Mesh, you get extensions that are cleaner, faster, and more resilient. Your ABAP apps can automatically react to events—trigger workflows, update analytics models, call RPA bots, run validations, or initiate integrations. And because everything is decoupled, adding new functionality doesn’t require modifying the ERP or rewriting old integrations. This approach supports SAP’s Fit-to-Standard strategy, accelerates cloud adoption, and dramatically shortens time-to-value for new capabilities.

Think of S/4HANA and ECC as producers of business events. Think of Event Mesh as the distribution network. Think of ABAP Environment as the place where you interpret those events and take action—securely, cleanly, and upgrade-safe. The result is an SAP landscape that behaves more like a modern digital platform: modular, scalable, and ready for AI and automation services that depend on real-time signals from the business.

Cheers!